Azure Kubernetes Service (AKS): How to secure your AKS cluster after Pod Security Policy removal

Hello Folks,

In this AKS series post I would like to talk about how to secure your AKS cluster after the Pod Security Policy removal from Kubernetes.

1. PSP Deprecation

Those of you who work with Kubernetes regularly and track the release changelog have probably heard that Pod Security Policies (PSP) were deprecated in Kubernetes v1.21 and will be completely removed in v1.25. PSP is a cluster-level resource that controls security-sensitive aspects of the pod specification. A PodSecurityPolicy object defines a set of conditions that a pod must meet in order to be accepted into the system, as well as defaults for the related fields. With PSP you can, for example, deny privileged pods, prevent containers from running as root, or force a read-only root file system.
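To make this more concrete, here is a minimal sketch of the pod-level settings that PSP was typically used to control. The pod name and image are hypothetical placeholders; the securityContext fields are standard Kubernetes fields.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hardened-example                      # hypothetical name
spec:
  containers:
    - name: app
      image: registry.example.com/app:1.0     # placeholder image
      securityContext:
        privileged: false                     # no privileged container
        allowPrivilegeEscalation: false       # no privilege escalation
        runAsNonRoot: true                    # refuse to start as root
        readOnlyRootFilesystem: true          # read-only root file system
```

A policy engine (PSP in the past, Kyverno or Gatekeeper now) checks that fields like these are set the way you require before the pod is admitted.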

For cluster admins, the PSP deprecation means that we need to prepare our K8S infrastructure to control the security context of the pods and containers that run on our clusters. There are a bunch of different projects that can help achieve the same as PSP (or even more). In my opinion, two projects are the most valuable in this area: Kyverno and OPA Gatekeeper. Both solutions are open source CNCF projects and provide great alternatives to PSP.

2. Kyverno or OPA Gatekeeper

Both Kyverno and OPA Gatekeeper run as admission controllers in Kubernetes. They receive validating and mutating admission webhook HTTP callbacks from the API server and apply matching policies to return results that enforce admission policies or reject requests.

Both policy managers can validate (check that a resource matches specified criteria) and mutate (edit a resource based on specified criteria). However, only Kyverno can generate new resources based on specified conditions, and this is quite a valuable feature because it can automate a lot of things. For example, you can create a Kyverno policy so that each time a new namespace is created, Kyverno additionally creates a network policy, a resource quota or whatever resource you need.
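As a rough illustration of the mutate case, the sketch below shows a Kyverno policy that adds a label to every namespace that does not already have it. The policy name, rule name and the managed-by label are assumptions made up for this example, and the match block follows the same style as the other Kyverno policies in this post; newer Kyverno releases may prefer a different match syntax.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-managed-by-label        # hypothetical policy name
spec:
  rules:
    - name: add-label-to-namespace  # hypothetical rule name
      match:
        resources:
          kinds:
            - Namespace
      mutate:
        patchStrategicMerge:
          metadata:
            labels:
              # "+(key)" means: add the key only if it is not present yet
              +(managed-by): kyverno
```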

Another key difference between Gatekeeper and Kyverno is the way policies are written.

Gatekeeper uses the Rego language to describe policy constraint templates. In AKS, if you use Azure Policy (aka Gatekeeper), a lot of built-in policies are already available and you can use them without any knowledge of Rego. But if you want to create your own custom policy, you will need to understand how to write Rego, and this might be tricky if your policy is complex.

Kyverno policies are much simpler because they are described in YAML format like any other Kubernetes resource. Sure, you still need to understand the basic rules of how Kyverno policies should be written and which specs are supported in policy definition files. You can run kubectl explain clusterpolicy or kubectl explain policy in your terminal to see which specs are supported for Kyverno cluster policies and policies.

Here is how a policy which requires the environment label to be set on each namespace looks in OPA Gatekeeper.

In Gatekeeper we first need to specify a constraint template where we describe our policy rules:

    apiVersion: templates.gatekeeper.sh/v1beta1
    kind: ConstraintTemplate
    metadata:
      name: k8srequiredlabels
    spec:
      crd:
        spec:
          names:
            kind: K8sRequiredLabels
          validation:
            # Schema for the `parameters` field
            openAPIV3Schema:
              properties:
                labels:
                  type: array
                  items:
                    type: string
      targets:
        - target: admission.k8s.gatekeeper.sh
          rego: |
            package k8srequiredlabels

            violation[{"msg": msg, "details": {"missing_labels": missing}}] {
              provided := {label | input.review.object.metadata.labels[label]}
              required := {label | label := input.parameters.labels[_]}
              missing := required - provided
              count(missing) > 0
              msg := sprintf("you must provide labels: %v", [missing])
            }

After this we need to create a constraint for this template. The constraint describes which Kubernetes resources the constraint template applies to.

    apiVersion: constraints.gatekeeper.sh/v1beta1
    kind: K8sRequiredLabels
    metadata:
      name: pod-must-have-gk
    spec:
      match:
        kinds:
          - apiGroups: [""]
            kinds: ["Namespace"]
      parameters:
        labels: ["environment"]

The following yaml shows how the same policy looks in Kyverno:

    apiVersion: kyverno.io/v1
    kind: ClusterPolicy
    metadata:
      name: require-labels
    spec:
      validationFailureAction: audit
      background: true
      rules:
        - name: check-for-labels
          match:
            resources:
              kinds:
                - Namespace
          validate:
            message: "The label `environment` is required."
            pattern:
              metadata:
                labels:
                  environment: "?*"

As you can see, the Kyverno policy is much simpler and easier to understand than the Gatekeeper one.
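To see what the require-labels policy checks, here is a small sketch with two namespaces; the names team-a and team-b and the value dev are made up for illustration. The pattern "?*" requires the label value to contain at least one character.

```yaml
# Matches the pattern: the environment label is present and non-empty
apiVersion: v1
kind: Namespace
metadata:
  name: team-a            # hypothetical name
  labels:
    environment: dev      # hypothetical value
---
# Does not match the pattern: no environment label at all
apiVersion: v1
kind: Namespace
metadata:
  name: team-b            # hypothetical name
```

Because the policy uses validationFailureAction: audit, the second namespace should still be created, with the violation only recorded in the policy report rather than blocking the request.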

These two aspects are the main reasons why I chose Kyverno instead of Azure Policy + OPA Gatekeeper. It’s also worth mentioning that you can use both policy managers in parallel if you wish, but if your AKS clusters are large, with a lot of requests to the API, this may add load on the control plane and cause delays during various operations with resources. This is because a lot of API requests (depending on which resource kinds the policies apply to) will be forwarded to and processed by both the Kyverno and Gatekeeper admission controllers before the action is performed.

If you would like to see a super detailed comparison between Kyverno and OPA Gatekeeper, I recommend reading this Kyverno vs OPA Gatekeeper comparison post.

3. Protect AKS with Azure Policy for Kubernetes aka OPA Gatekeeper

If you want to secure your AKS cluster with Azure Policy for Kubernetes, you should enable the Azure Policy for Kubernetes addon for AKS. To enable it, run the following command:

az aks enable-addons --addons azure-policy --resource-group <resource-group> --name <cluster-name>

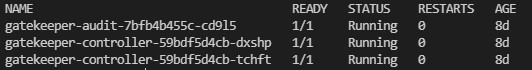

After the addon is enabled you will see additional Gatekeeper pods running in the gatekeeper-system namespace of your AKS cluster. These pods run as admission controllers and they are responsible for enforcing policies on your cluster.

Next you can create an Azure Policy initiative (which contains several policy definitions) and assign it to your subscription or resource group. There are several built-in Azure Policy initiatives already created for you:

- Kubernetes cluster pod security baseline standards for Linux-based workloads

- Kubernetes cluster pod security restricted standards for Linux-based workloads

These two initiatives contain policies which help to secure your cluster according to the Kubernetes Pod Security Standards, and they can also cover the same areas as PSP does:

- Restrict privileged containers

- Restrict Using Host Network

- Restrict Using Host PID

- Restrict Capabilities

- Restrict Some Volume Types (e.g. HostPath)

- Restrict privilege escalation

- Restrict SecComp Profiles

- Restrict Root User

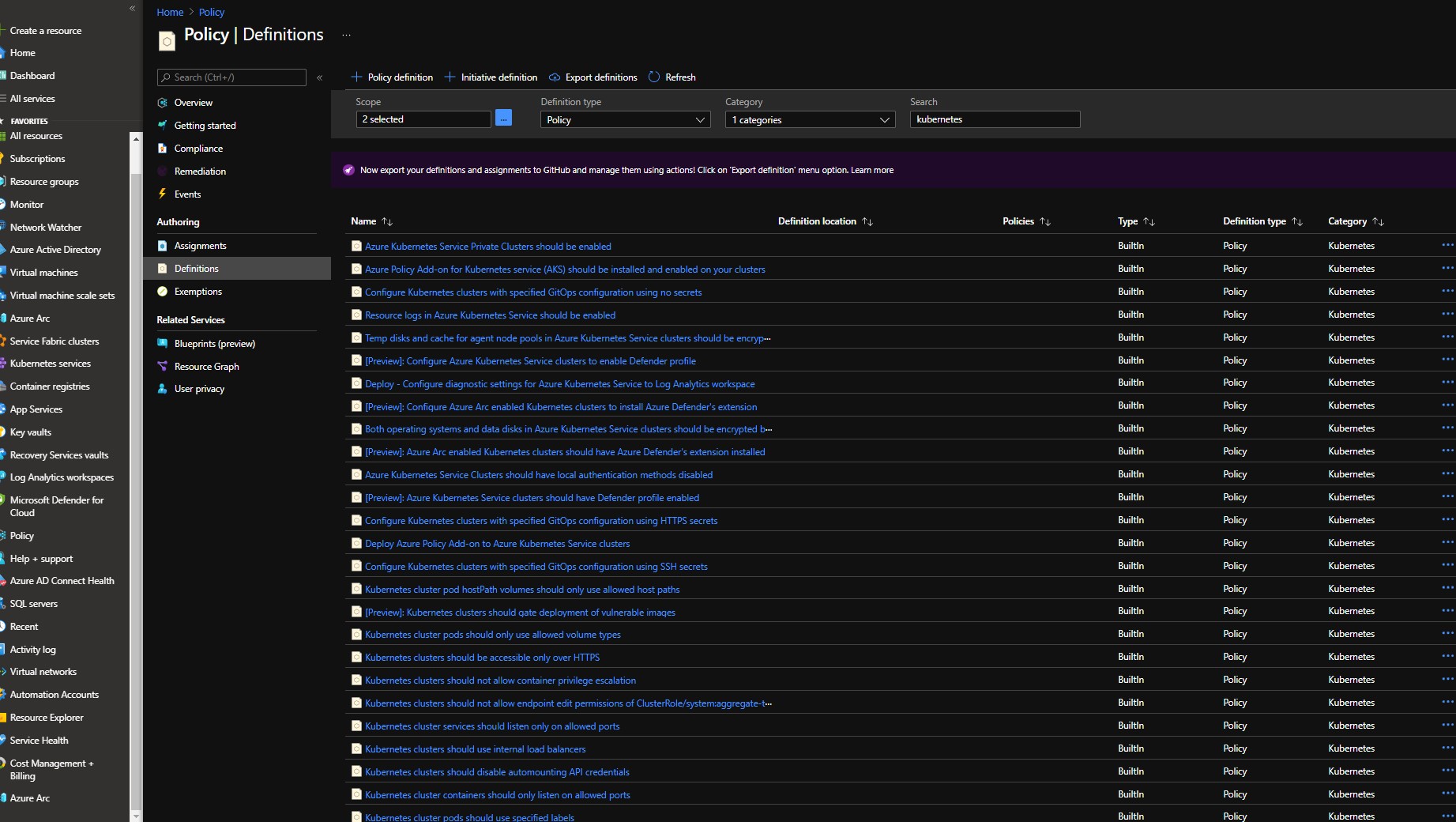

There are also a lot of other built-in policies, which can be found in the Azure Policy built-in definitions for Azure Kubernetes Service documentation.

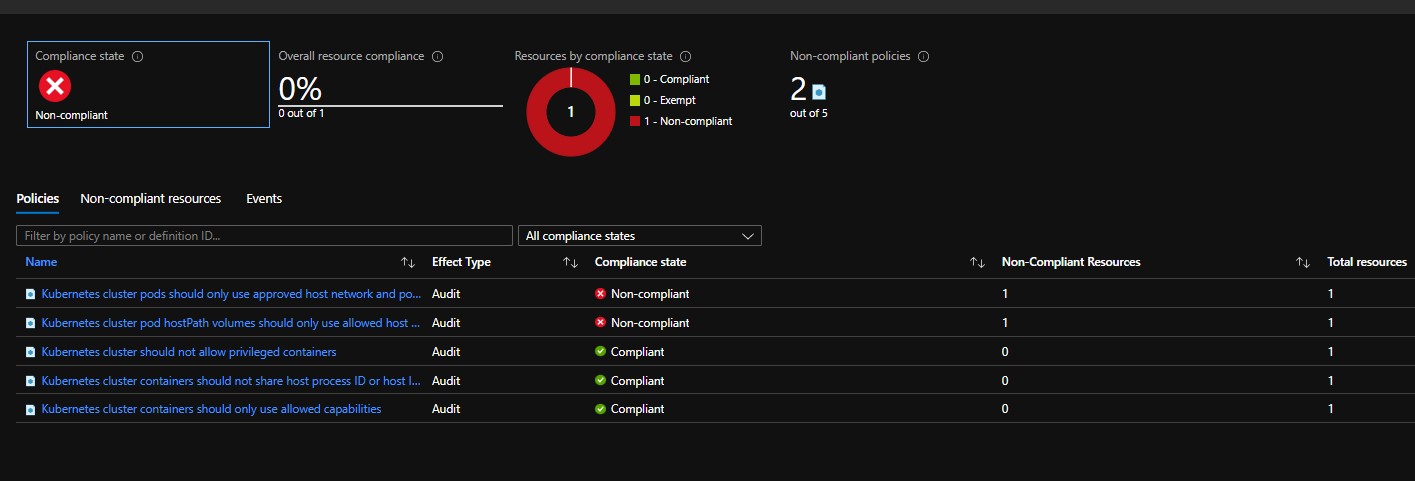

These policies may help you not only to secure your cluster but also to enforce some Kubernetes or AKS specific standards, like requiring a specific label on each namespace or enforcing the use of an internal load balancer. Each policy can be set in three different modes:

- Enforce: enforce the policy.

- Warn: warn if the policy is violated.

- Audit: audit the policy.

The policy assignment process is the same as for any other Azure policy. You choose the policy or policy initiative and assign it to your subscription, resource group or resource:

az policy assignment create --name <policy-assignment-name> --policy <policy-name> --display-name <policy-assignment-display-name> --resource-group <resource-group>

Built-in Azure Policies for Kubernetes are written in such a way that you can add exclusions or assign your own values via parameters.

If your case is not covered by the built-in AKS policies, it is also possible to create your own custom policy for Azure Kubernetes Service. This functionality is currently in Preview (Create and Assign Custom Policy for Azure Kubernetes Service), and to me it also looks a bit too complicated to implement compared to Kyverno.

An additional disadvantage is that a policy assignment or update can take up to 20 minutes to sync to each cluster. This can be a problem if you need to quickly fix some behavior.

Microsoft Defender for Cloud and Azure Advisor use the same built-in Azure Policies for Kubernetes to evaluate your clusters for potential security issues and misconfigurations.

You can review how compliant your cluster is with the assigned policies right in the Azure portal, under the Azure Policy Compliance blade.

4. Protect AKS with Kyverno

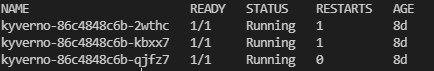

If you choose to use Kyverno to secure and manage your AKS cluster, you need to deploy it using the official Kyverno Helm chart. Follow the official Kyverno installation guide or simply run the following commands against your AKS cluster:

helm repo add kyverno https://kyverno.github.io/kyverno/

helm repo update

helm install kyverno kyverno/kyverno --namespace kyverno --create-namespace

Refer to Kyverno Helm Chart Configuration if you want to adjust some parameters.

After a successful installation of Kyverno you will see new Kyverno deployment pods running on your AKS cluster.

So now you can use Kyverno to enforce policies on your AKS cluster.

Unlike Azure Policy for Kubernetes, with Kyverno you can create policies not only to validate or mutate resources, but also policies which generate new K8S resources based on specified rules. Here, for example, is a policy which creates a network policy for each new namespace:

    apiVersion: kyverno.io/v1
    kind: ClusterPolicy
    metadata:
      name: add-networkpolicy
      annotations:
        policies.kyverno.io/title: Add Network Policy
        policies.kyverno.io/category: Multi-Tenancy
        policies.kyverno.io/subject: NetworkPolicy
        policies.kyverno.io/description: >-
          By default, Kubernetes allows communications across all Pods within a cluster.
          The NetworkPolicy resource and a CNI plug-in that supports NetworkPolicy must be used to restrict
          communications. A default NetworkPolicy should be configured for each Namespace to
          default deny all ingress and egress traffic to the Pods in the Namespace. Application
          teams can then configure additional NetworkPolicy resources to allow desired traffic
          to application Pods from select sources. This policy will create a new NetworkPolicy resource
          named `default-deny` which will deny all traffic anytime a new Namespace is created.
    spec:
      rules:
        - name: default-deny
          match:
            resources:
              kinds:
                - Namespace
          generate:
            apiVersion: networking.k8s.io/v1
            kind: NetworkPolicy
            name: default-deny
            # create the NetworkPolicy in the namespace that triggered the rule
            namespace: "{{request.object.metadata.name}}"
            synchronize: true
            data:
              spec:
                # select all pods in the namespace
                podSelector: {}
                # deny all traffic
                policyTypes:
                  - Ingress
                  - Egress
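For illustration, when a new namespace is created (team-a below is a hypothetical name), the policy above should produce a NetworkPolicy in that namespace that looks roughly like the following sketch. Kyverno may also add its own labels to generated resources, which are left out here.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny
  namespace: team-a       # hypothetical namespace that triggered the rule
spec:
  # an empty selector matches every pod in the namespace
  podSelector: {}
  # both directions are listed and no allow rules follow, so all traffic is denied
  policyTypes:
    - Ingress
    - Egress
```

Application teams can then add their own, more specific NetworkPolicy resources next to it to allow the traffic they need.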

There are already more than 90 different policies in the Kyverno Policies Library, so you can use all these examples and adapt them to your use case. All the policies are quite simple and easy to understand as they are written in YAML format. You can also template them and deploy them with helm like any other Kubernetes resource.

In addition to the Kyverno Policies Library, Kyverno has an official kyverno-policies helm chart which contains policies that cover the Kubernetes Pod Security Standards. Generally these policies cover the same aspects as Pod Security Policies or the Azure Kubernetes pod security initiatives. All the information is in the Kyverno Policies helm chart documentation.

Currently you can configure only a few global settings in the kyverno-policies chart, and you can’t granularly configure particular policies in one place via a helm configuration file. So I decided to create my own Kyverno policies helm chart and adapt it specifically for Azure Kubernetes Service. Currently my aks-kyverno-policies helm chart contains more than 20 policies which might be useful to secure your AKS cluster and ease its management. They help to cover the PSP deprecation, restrict LB services to internal only, control allowed container registries, control labels etc. They also help to automate the creation of such things as limit ranges, network policies, etc.

The main advantage of this helm chart is that you can configure all policies in one place through the helm configuration file. You can enable or disable policies, set exclusions, set how a policy will be applied and, if it’s a policy which generates resources, set the resource specification. You can review all configurable settings in the chart itself. This approach lets you easily standardize your policies and adjust them for different environments by simply editing your helm config file.

In order to install aks-kyverno-policies helm chart perform the following steps:

Note: It’s recommended to first deploy the policies in audit mode (policies.*.validationFailureAction: audit) to make sure that everything works as expected and does not break existing deployments.

helm repo add sysadminas https://sysadminas.eu/helm-charts/ # Add sysadminas Helm Chart Repository

helm repo update # Update Helm Chart Repositories

If you are satisfied with the default settings, run the following:

helm upgrade aks-kyverno-policies sysadminas/aks-kyverno-policies --namespace kyverno -i # Install with default settings; you will probably need to change some settings based on your needs

If you would like to change some settings, use the following:

helm pull sysadminas/aks-kyverno-policies --untar # Pull the Helm chart, unpack it to a local directory and adjust the values in the helm config file

helm upgrade aks-kyverno-policies aks-kyverno-policies --namespace kyverno -i # Run after you have adjusted the values in the helm config file, or pass your own values file with the -f option

I will keep updating this helm chart with new policies which might be useful in an AKS environment. All contributions are welcome.

If you would like to write your own policy, I would suggest using the Kyverno Policies Library as a reference and reading the Writing Policies documentation, which will help you understand the basic principles of writing policies for Kyverno.

It’s also worth mentioning that Kyverno has its own CLI, which can be used to test policies against your AKS cluster before you deploy them. It is also useful if you deploy the policies with a CI/CD pipeline.

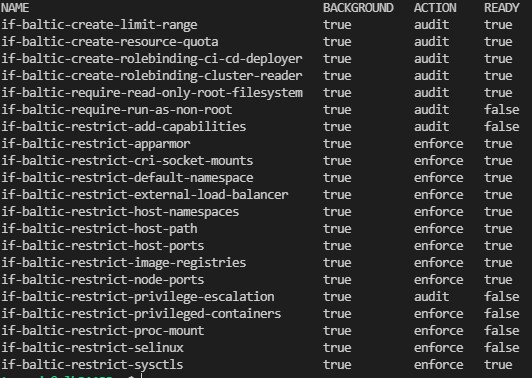

To review all the installed kyverno policies in your AKS cluster run the following:

kubectl get clusterpolicy

A Kyverno policy can be cluster wide (ClusterPolicy) or namespace wide (Policy), similar to K8S cluster roles and roles. Currently aks-kyverno-policies contains only cluster wide policies, however you can easily exclude any namespace from policy evaluation by adding it to the excludedNamespaces list in the helm config file.
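For comparison with the cluster wide policies shown earlier, here is a minimal sketch of a namespace wide policy. It is not part of aks-kyverno-policies; the policy name, the team-a namespace and the app label are assumptions for this example. The only structural differences from a ClusterPolicy are the kind and the metadata.namespace field.

```yaml
apiVersion: kyverno.io/v1
kind: Policy                       # namespaced variant of ClusterPolicy
metadata:
  name: require-app-label          # hypothetical policy name
  namespace: team-a                # the policy only applies inside this namespace
spec:
  validationFailureAction: audit
  background: true
  rules:
    - name: check-for-app-label    # hypothetical rule name
      match:
        resources:
          kinds:
            - Pod
      validate:
        message: "The label `app` is required."
        pattern:
          metadata:
            labels:
              app: "?*"
```

Policies of this kind are listed with kubectl get policy -n team-a rather than kubectl get clusterpolicy.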

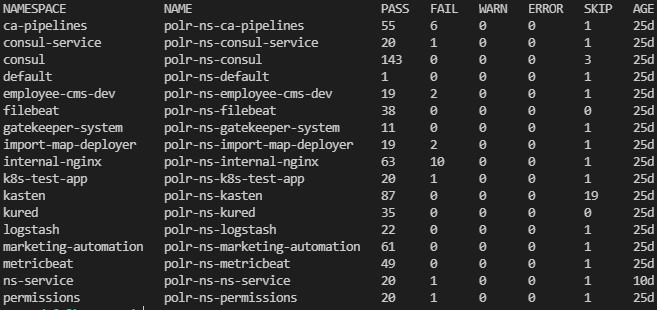

Kyverno also generates cluster policy reports and policy reports in order to allow you to review the policy evaluation results.

kubectl get policyreport -A

Note: Even if you deploy a cluster policy, in most cases you will need to review a policy report, because the policy report contains the evaluation results for namespaced K8S resources.

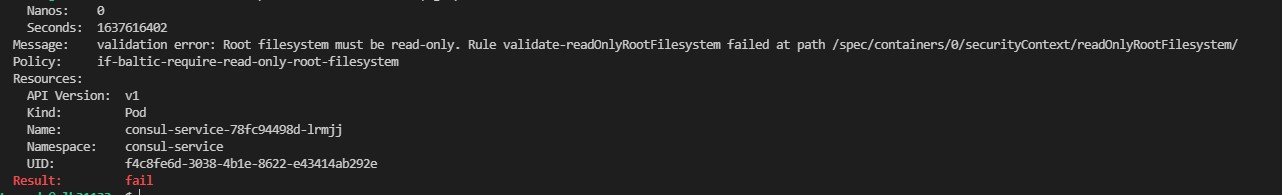

If you would like to review which policies have a failed validation status, you can run the following:

kubectl describe polr -A | grep -i "Result: \+fail" -B10 # Review all failed policies

kubectl describe polr -n <namespace> | grep -i "Result: \+fail" -B10 # Review failed policies for specific namespace

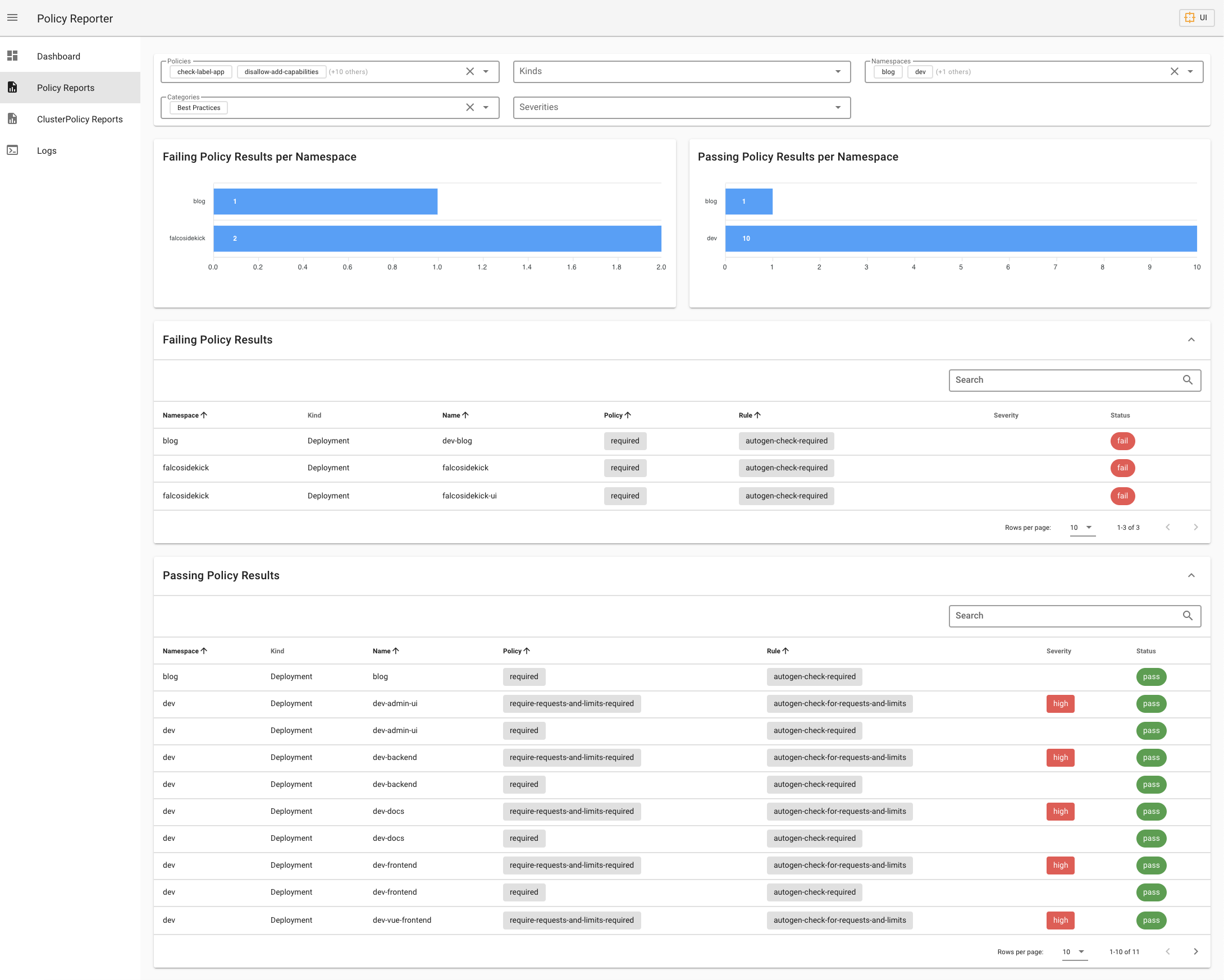

There is also an additional project, Kyverno Policy Reporter, which is deployable with a separate helm chart. This solution allows you to review the reports in a user-friendly way via a web UI.

I would also like to mention the Kyverno Slack channel, which is a good place to get help from Kyverno team members.

5. Pod Security Admission Control

It’s important to say that, with the PSP deprecation, a new Pod Security Admission controller will be introduced in upcoming Kubernetes versions. You can review all the feature details in the official Kubernetes GitHub repository.
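As a rough sketch of how this looks in practice, Pod Security Admission is configured with labels on a namespace that select one of the Pod Security Standards levels (privileged, baseline or restricted) for each mode. The namespace name below is hypothetical, and whether the feature is available depends on the Kubernetes version of your cluster.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: team-a                                        # hypothetical name
  labels:
    # reject pods that violate the baseline standard
    pod-security.kubernetes.io/enforce: baseline
    # admit, but warn the user about, pods that violate the restricted standard
    pod-security.kubernetes.io/warn: restricted
    # record restricted violations in the audit log
    pod-security.kubernetes.io/audit: restricted
```

Unlike Kyverno or Gatekeeper, this mechanism only validates pods against the predefined standards; it does not mutate or generate resources.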

6. Conclusion

As you can see, removing PSP from Kubernetes will not be a problem, and we have really good, or actually much better, tools to keep Kubernetes clusters secure and easy to manage. As I mentioned, my own choice is Kyverno, as it makes it easy to write, deploy and update policies. Kyverno also really simplifies cluster management with policies for resource generation.

That is all for now. I hope you will find this post useful and interesting. If you have any questions or suggestions please don’t hesitate to contact me. I will be happy to answer your questions.

Thank you 🤜🤛
